The new AI assistant will help helicopter pilots to perform tasks within and beyond their skillsets.

The Defense Advanced Research Projects Agency (DARPA) awarded Northrop Grumman a contract to develop a prototype Artificial Intelligence (AI) assistant that will be integrated into the UH-60 Black Hawk as part of the agency’s Perceptually-enabled Task Guidance (PTG) program. The prototype will be embedded in an augmented reality (AR) headset mounted on the helmet to help helicopter pilots perform both expected and unexpected complex tasks.

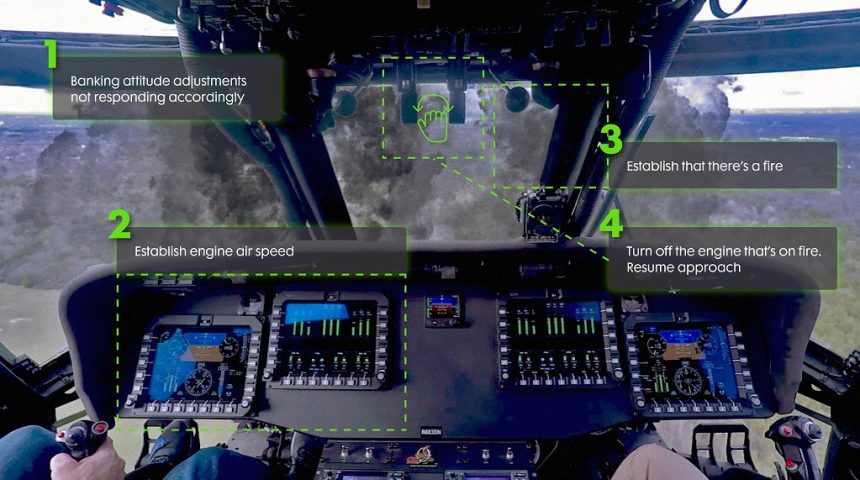

Northrop Grumman is working on this project, which has been named Operator and Context Adaptive Reasoning Intuitive Assistant (OCARINA), in partnership with the University of Central Florida (UCF). The assistant will support UH-60 Black Hawk pilots during both visual and instrumented flight. “The goal of this prototype is to broaden a pilot’s skillset,” said Erin Cherry, senior autonomy program manager at Northrop Grumman. “It will help teach new tasks, aid in the recognition and reduction of errors, improve task completion time, and most importantly, help to prevent catastrophic events.”

Rotorcraft aircrews face numerous demands, particularly when flying close to buildings, terrain, and people, or under the threat of adversary radar systems. Today, the most common aids for a rotorcraft aircrew are simple warning systems, such as auditory alerts to increase altitude or warning lights that signal a multitude of different problems. These warning systems, however, are limited and can impose unanticipated cognitive burdens on pilots: studies show that inattentional blindness to such warnings can occur, often making them ineffective for the aircrew.

Military personnel today are expected to perform a growing number of tasks that are more complex than ever before. Mechanics, for example, are asked to repair more types of increasingly sophisticated machines and platforms, and medics are asked to perform more procedures over extended periods of time. The goal of the PTG program is to make users more versatile by expanding their skillset and more proficient by reducing their errors.

“Increasingly we seek to develop technologies that make AI a true, collaborative partner with humans,” said Bruce Draper, a program manager at DARPA’s Information Innovation Office. “Developing virtual assistants that can provide substantial aid to human users as they complete tasks will require advances across a number of machine learning and AI technology focus areas, including knowledge acquisition and reasoning.”

Here is how DARPA describes the program on its website:

“The Perceptually-enabled Task Guidance (PTG) program aims to develop artificial intelligence (AI) technologies to help users perform complex physical tasks while making them more versatile by expanding their skillset and more proficient by reducing their errors. PTG seeks to develop methods, techniques, and technology for artificially intelligent assistants that provide just-in-time visual and audio feedback to help with task execution.

The goal is to provide users of PTG assistants with wearable sensors (head-mounted cameras and microphones) that allow the assistant to see what they see and hear what they hear, and augmented reality (AR) headsets that allow assistants to provide feedback through speech and aligned graphics.

The target assistants will learn about tasks relevant to the user by ingesting knowledge from checklists, illustrated manuals, training videos, and other sources of information. They will then combine this task knowledge with a perceptual model of the environment to support mixed-initiative and task-focused user dialogs. The dialogs will assist a user in completing a task, identifying and correcting an error during a task, and instructing them through a new task, taking into consideration the user’s level of expertise.”

While Northrop Grumman’s project is focused on Black Hawk pilots, DARPA says the PTG program is also aimed at mechanics, medics, and other specialists so they can perform tasks within and beyond their skillsets. PTG is meant to develop only software-based AI technology; sensors, computing hardware, and augmented reality headsets will rely on commercial-off-the-shelf (COTS) technology, as development of new hardware is outside the scope of the program.

The PTG program is performing research in two Technical Areas (TAs). The first area (TA1) is for fundamental research to address a group of four interconnected key problems: knowledge transfer, perceptual grounding, perceptual attention, and user modeling. The second area (TA2) centers on integrated demonstrations of technologies derived from TA1 research on use case scenarios relevant to the military in one of three broad areas: mechanical repair (Mechanics), battlefield medicine (Medics), or pilot guidance (Pilots).

Each of the four key problems matters for an effective AI assistant. Knowledge transfer is needed for an assistant to automatically acquire task knowledge from checklists, manuals and training videos, while perceptual grounding allows the assistant to align sensor data with the terms used to describe tasks, so that observations can be mapped back to task knowledge. Perceptual attention allows the assistant to pay attention only to events (both expected and unexpected) relevant to the task, and user modeling lets it determine how much information to present to the user and when to do so.

DARPA also described three general use cases.

In the first one, the user can initiate the interaction by asking what to do next and then receive instructions from the AI. In the second, the AI performs a “surveillance” of the user’s actions, issuing a warning and suggesting remedial action if the user makes a mistake. In the third one, the AI performs a teacher’s role, walking the user through the various steps of a new task.
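To make the three use cases concrete, here is a minimal, purely illustrative Python sketch of how an assistant might choose its response in each one. Nothing here comes from DARPA or Northrop Grumman: the names `Mode`, `TaskState`, and `respond` are hypothetical, the task is reduced to an ordered checklist of strings, and perception is assumed to have already produced a label for the observed action.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import List, Optional


class Mode(Enum):
    """Hypothetical labels for the three use cases described above."""
    USER_QUERY = auto()  # the user asks what to do next
    MONITOR = auto()     # the assistant watches for mistakes
    TEACH = auto()       # the assistant walks through a new task


@dataclass
class TaskState:
    """A task reduced to an ordered checklist (an assumption for this sketch)."""
    steps: List[str]
    completed: int = 0  # number of steps already finished

    def next_step(self) -> Optional[str]:
        if self.completed < len(self.steps):
            return self.steps[self.completed]
        return None


def respond(mode: Mode, state: TaskState,
            observed_action: Optional[str] = None) -> str:
    """Return the assistant's message for the given mode; '' means stay silent."""
    expected = state.next_step()
    if expected is None:
        return "Task complete."
    if mode is Mode.USER_QUERY:
        return f"Next: {expected}"
    if mode is Mode.MONITOR:
        # Stay silent unless the observed action deviates from the checklist.
        if observed_action is None or observed_action == expected:
            return ""
        return (f"Warning: expected '{expected}', saw '{observed_action}'. "
                f"Suggested action: {expected}")
    # Mode.TEACH: list every remaining step, numbered from the current one.
    remaining = state.steps[state.completed:]
    return "\n".join(
        f"Step {state.completed + i + 1}: {step}"
        for i, step in enumerate(remaining)
    )
```

With a two-step checklist such as `TaskState(["check fuel", "start engine"])`, a user query returns the first step, monitoring stays silent while the observed action matches and warns when it does not, and teaching mode lists both steps in order. A real assistant would also have to account for the user's level of expertise and for unexpected events, which this sketch ignores.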

Northrop Grumman has long been working on leading-edge Artificial Intelligence and Machine Learning solutions, developing and integrating them into large, complex, end-to-end mission systems thanks to powerful, proven algorithm development and implementation processes. The Department of Defense’s AI Strategy defines Artificial Intelligence and Machine Learning as “the ability of machines to perform tasks that normally require human intelligence,” enabling humans to make educated decisions by parsing through information and delivering relevant data at mission speed.

Because of this, secure and ethical AI principles, defined by the Defense Innovation Board and adopted by the Department of Defense and other customers, guide the design and development of AI systems and solutions. As mentioned by Northrop Grumman, AI technologies need to be responsible, equitable, traceable, reliable, governable, auditable and protected against threats, considering not only the technical aspects, but also the legal, policy and social implications.